Hrishikesh Pawar

Email | GitHub | LinkedIn | Resume

I am a Computer Vision Engineer at Pixels on Target, where I architect high-performance perception and sensing solutions. My expertise lies in developing robust algorithms for complex environments and optimizing imaging pipelines for real-time deployment on resource-constrained edge platforms.

I completed my Master's in the Robotics Engineering Program at Worcester Polytechnic Institute (WPI). As a Graduate Student Researcher in the Perception and Autonomous Robotics Group, I focused my research on compute-efficient autonomous quadrotor navigation using Neuromorphic Vision Sensors. I published Blurring For Clarity at the EVEGEN Workshop at WACV 2025, introducing a novel Depth-from-Defocus framework for event cameras that enables low-power obstacle segmentation without traditional depth estimation techniques.

In the summer of 2024, I interned at Nobi Smart Lights on the AI-Perception team, where I worked on real-time fall detection models and deployment pipelines for smart ceiling lamps designed for elderly care.

Prior to my graduate studies, I spent three amazing years at Adagrad AI, a dynamic startup delivering production-grade Computer Vision solutions. There, I tackled high-impact challenges in real-time video analytics, including OCR, license plate recognition, pose estimation, and semantic segmentation.

Worcester Polytechnic Institute

Master's in Robotics Engineering

Relevant Coursework: CS-RBE549: Computer Vision, CS541: Deep Learning, RBE595: Vision-Based Robotic Manipulation, RBE550: Motion Planning

Smt. Kashibai Navale College of Engineering

Bachelor's in Mechanical Engineering

I spent my undergraduate days working in the Combustion and Driverless Formula Student Team, designing, building, and racing Formula-3 prototype racecars at various national and international competitions. My four-year stint on the team allowed me to apply several concepts from my coursework, building a solid foundation in Mechanical Engineering fundamentals.

Computer Vision Engineer | July 2025 - Present

As a Computer Vision Engineer, I architect high-performance perception pipelines and sensing solutions. My work focuses on developing robust sensor fusion frameworks that operate in complex environments and optimizing imaging pipelines for deterministic performance on resource-constrained tactical edge compute hardware.

Machine Learning Intern | January 2025 - May 2025

As a Machine Learning Intern at TrueLight Energy, I automated energy profile extraction across major ISOs using Optical Character Recognition (OCR), reducing data latency by 50%. I supported this by deploying scalable AWS (EC2) ETL pipelines for high-throughput ingestion, which cut operational costs by 40%. Additionally, I formalized system specifications for the TRUEPrice platform, creating a standardized framework that streamlined cross-team adoption of analytical tools.

AI Software Intern | May 2024 - August 2024

As an AI Software Intern at Nobi, I led the development of smart ceiling lamps focused on real-time fall detection and emergency response for elderly care. I developed a rotation-aware detection model using Swin Transformer backbones, enhancing the model's performance in real-world scenarios. I also worked with vision-language models like LLaVA and CLIP, using LoRA for fine-tuning to improve task generalization. Additionally, I automated the entire deployment pipeline using Jenkins, Kubernetes, and Docker, streamlining the process from model training to real-world application.

Computer Vision Engineer | November 2020 - July 2023

Developed hardware-accelerated Computer Vision products addressing crucial real-world problems.

Gate-Guard: The focus was on creating an edge-based boom barrier system leveraging Automatic License Plate Recognition (ALPR) for vehicle access control. I developed data collection, model training, and deployment pipelines tailored to lightweight object detection models like YOLOX and YOLOv5, optimized for the NVIDIA Jetson TX2. Beyond model implementation, I developed interactive analytics and monitoring services using Django, Azure, WebSockets, Kafka, Celery, and Redis to ensure real-time data processing and system scalability.

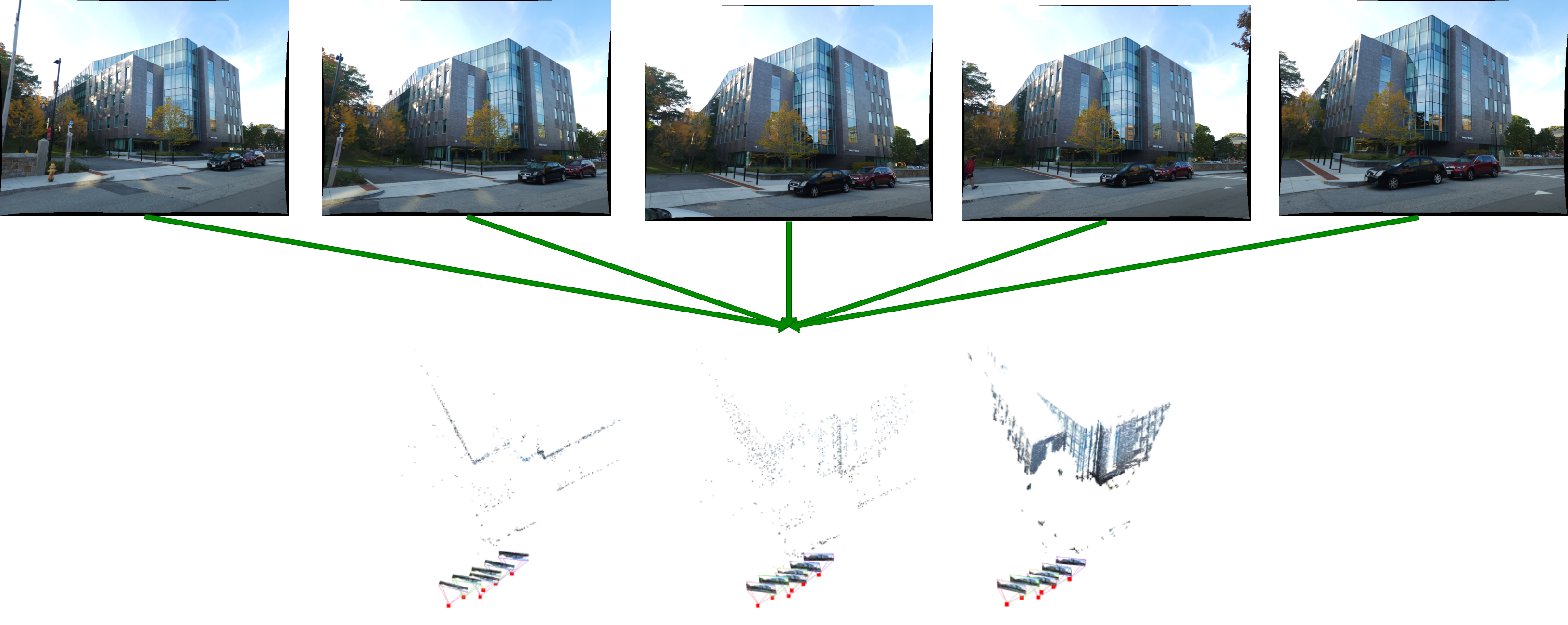

Graduate Student Researcher

As part of my research at PeAR Lab, WPI, I developed a Depth-from-Defocus approach using event cameras that extracts sharpness-based depth cues for efficient obstacle segmentation. This enables lightweight navigation in resource-constrained environments. This work was published at the EVEGEN Workshop, WACV 2025, where we demonstrated significant improvements in both obstacle segmentation and efficiency over state-of-the-art methods (Depth-Pro, MiDaS, RAFT).

In parallel, I've built a custom simulator based on 3D Gaussian Splats to generate high-frame-rate, photorealistic frames and events, supporting Hardware-In-The-Loop (HITL) testing and more realistic evaluation of vision pipelines. I'm currently extending this work towards learning-based navigation in low-light environments, leveraging structured lighting with event cameras, with a strong focus on sim-to-real transfer for real-world deployment.

Graduate Student Researcher

Using depth images and point clouds to manipulate a waste stream with a robotic arm and to uncover occluded or covered objects.

Hrishikesh Pawar, Deepak Singh, Nitin J. Sanket

Passive computation enables efficient aerial robot navigation in cluttered environments by leveraging wave physics to extract depth cues from defocus, eliminating costly explicit depth computation. Using a large-aperture lens on a monocular event camera, our approach optically blurs out irrelevant regions, reducing computational demands and achieving 62x savings over state-of-the-art methods, with promising real-world performance.

Implemented the Neural Radiance Fields (NeRF) technique for synthesizing novel views of scenes. This project leverages deep neural networks to model the volumetric scene function, encoding volume density as a function of 3D location and color as a function of location and viewing direction.
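
To give a feel for how per-sample density and color become a pixel, here is a minimal sketch of the discrete volume rendering sum commonly used with NeRF. It is an illustration under assumptions, not the project's code: the function name `composite_ray` and its arguments are hypothetical, and the densities and colors that a trained network would predict are passed in as plain lists.

```python
import math


def composite_ray(densities, colors, deltas):
    """Alpha-composite samples along one ray (hypothetical helper).

    densities: non-negative volume density at each sample
    colors:    (r, g, b) tuple at each sample
    deltas:    distance between consecutive samples
    Returns the rendered (r, g, b) list and the per-sample weights.
    """
    transmittance = 1.0  # fraction of light that has not been absorbed yet
    rgb = [0.0, 0.0, 0.0]
    weights = []
    for sigma, color, delta in zip(densities, colors, deltas):
        # Opacity contributed by this segment of the ray.
        alpha = 1.0 - math.exp(-sigma * delta)
        weight = transmittance * alpha
        weights.append(weight)
        for channel in range(3):
            rgb[channel] += weight * color[channel]
        # Light surviving past this sample.
        transmittance *= 1.0 - alpha
    return rgb, weights
```

Empty space (zero density) contributes nothing, while a very dense sample absorbs almost all remaining light, so samples behind it receive near-zero weight.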

Developed a classical Structure from Motion (SfM) pipeline to reconstruct 3D structures from sequences of 2D images. This project integrates key techniques such as feature detection, matching, motion recovery, and 3D reconstruction. Used essential matrix computation, triangulation, and bundle adjustment to estimate 3D points and camera poses, demonstrating the core principles of SfM in computer vision.

Implemented the seminal camera calibration method of Zhengyou Zhang from scratch to estimate the camera intrinsics and distortion parameters. Used singular value decomposition (SVD) and maximum likelihood estimation (MLE) to estimate the calibration parameters.

Implemented feature detection followed by Adaptive Non-Maximal Suppression (ANMS) to ensure an even distribution of corners across images, improving panorama stitching accuracy. Used feature matching and robust homography estimation with RANSAC to reject outlier matches. Employed image blending strategies such as alpha blending and Poisson blending.
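
The ANMS step can be sketched roughly as follows. This is a minimal illustration, not the project's implementation: the function name `anms` and the `(x, y, strength)` input format are assumptions, and it uses a plain "strictly stronger" comparison rather than the robustness factor found in some formulations.

```python
def anms(corners, num_best):
    """Keep the num_best corners that are strong and well spread out.

    corners: list of (x, y, strength) tuples (hypothetical input format)
    For each corner, compute the squared distance to the nearest strictly
    stronger corner, then keep the corners with the largest such radius.
    """
    radii = []
    for i, (xi, yi, si) in enumerate(corners):
        radius = float("inf")  # the strongest corner keeps an infinite radius
        for xj, yj, sj in corners:
            if sj > si:
                dist_sq = (xi - xj) ** 2 + (yi - yj) ** 2
                radius = min(radius, dist_sq)
        radii.append((radius, i))
    # Largest suppression radius first; ties keep their input order.
    radii.sort(key=lambda item: item[0], reverse=True)
    return [corners[i] for _, i in radii[:num_best]]


if __name__ == "__main__":
    detected = [(0, 0, 10.0), (1, 0, 9.0), (10, 10, 5.0), (0, 1, 8.0)]
    print(anms(detected, 2))
```

In this usage example the weaker but isolated corner at (10, 10) is preferred over the stronger corners clustered near the origin, which is the spreading behavior ANMS is meant to provide. The double loop is quadratic in the number of corners, which is usually acceptable for a few thousand detections.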

Implemented an edge detector that works by searching for texture, color, and intensity discontinuities across multiple scales. It is essentially a simplified version of the approach in Pablo Arbelaez's paper.

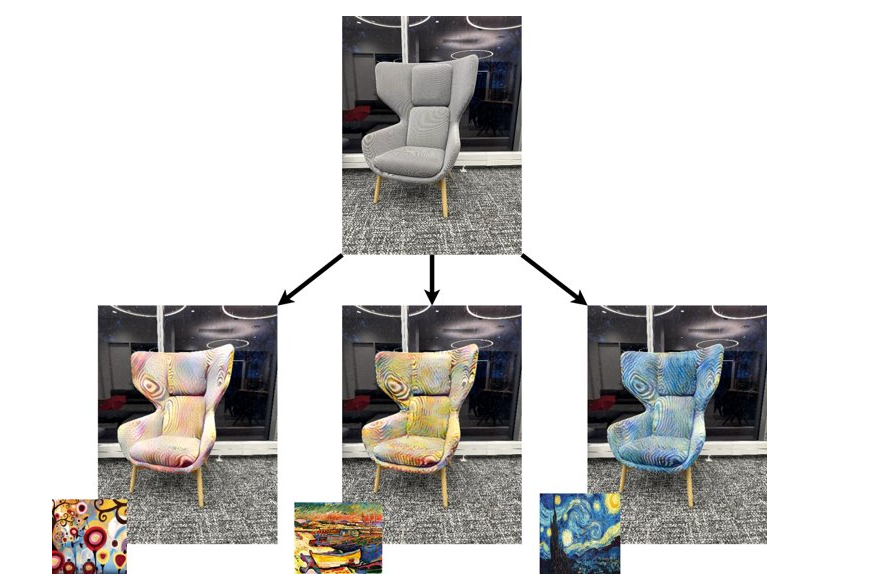

Implemented AdaAttN for applying diverse styles to images, with text-based image segmentation using CLIPSeg.